Machine Learning

Explore a brief overview of my projects in machine learning.

Background

Neural networks are systems of interconnected neurons through which information propagates to transform a set of inputs into an output.

Biological neural networks have been remarkably successful in the natural world: they are responsible for virtually all information processing in animals, including humans. Inspired by the versatility and robustness of biological neural networks, artificial neural networks (ANNs) use mathematical models to approximate the functioning of their biological counterparts, and they have moved to the forefront of machine learning research. Despite being heavily simplified versions of biological neural networks, ANNs have driven significant technological innovation, from defeating top professional Go players to powering advanced image recognition software.
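The standard artificial neuron makes this "mathematical model" concrete. It takes a weighted sum of its inputs $x_i$ with weights $w_i$, adds a bias $b$, and passes the result through an activation function $\sigma$. Stacking neurons into layers gives the matrix form that matrix-based implementations compute layer by layer. The notation below is the conventional one, shown as an illustration:

$$
a = \sigma\!\left(\sum_{i=1}^{n} w_i x_i + b\right),
\qquad
\mathbf{a}^{(l)} = \sigma\!\left(W^{(l)}\,\mathbf{a}^{(l-1)} + \mathbf{b}^{(l)}\right)
$$

Here $\mathbf{a}^{(0)}$ is the input vector, $W^{(l)}$ and $\mathbf{b}^{(l)}$ are the weights and biases of layer $l$, and $\sigma$ is applied element-wise.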

Given their notable impact on research and innovation, I created several projects to apply neural networks and understand the mathematics behind them. Projects 1-4 were all programmed using only the core matrix mathematics behind neural networks without using machine learning (ML) libraries.

Project #1 – NumPy-only Single-Layer Perceptron (2022)

My first-ever neural network project was a single-layer perceptron based on Rosenblatt’s original algorithm. I programmed it using only NumPy matrix operations; no ML libraries were used.

I trained the network on the classic MNIST dataset of 28×28 handwritten digits, on which it reached 87.3% accuracy. However, the architecture limited the maximum accuracy: Rosenblatt’s learning rule can only train a single layer of weights, and, as can be seen in the figure, the learned weights were noisy.

Weights created by the single-layer perceptron on the MNIST dataset
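For reference, Rosenblatt’s learning rule only changes the weights when a prediction is wrong: $w \leftarrow w + \eta\,(y - \hat{y})\,x$. The sketch below is a minimal plain-Python illustration of that rule on a toy AND-gate dataset. It is not the original NumPy project code and does not use MNIST; the function names, learning rate, and epoch limit are assumptions. For a 10-class problem such as MNIST, one common extension is one output unit per class.

```python
def step(z: float) -> int:
    """Heaviside step activation used by the classic perceptron."""
    return 1 if z >= 0 else 0

def predict(w, b, x):
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Rosenblatt's rule: w <- w + lr * (y - y_hat) * x, applied per sample."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            error = y - predict(w, b, x)    # 0 if correct, +1 or -1 if wrong
            if error != 0:
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
                mistakes += 1
        if mistakes == 0:                   # every sample classified correctly
            break
    return w, b

# Hypothetical toy task (AND gate), linearly separable, standing in for MNIST.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [0, 0, 0, 1]
w, b = train_perceptron(X, Y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

Because the rule only ever adjusts the weights feeding the output unit, there is no way to assign blame to hidden-layer weights, which is why the perceptron stays single-layer.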

Project #2 – NumPy-only Hard-Coded Networks (2023-2024)

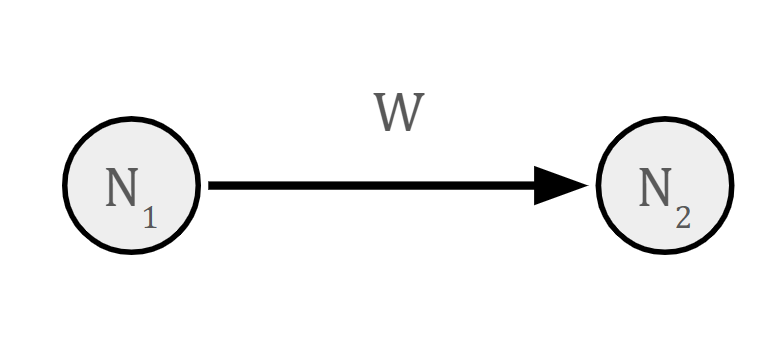

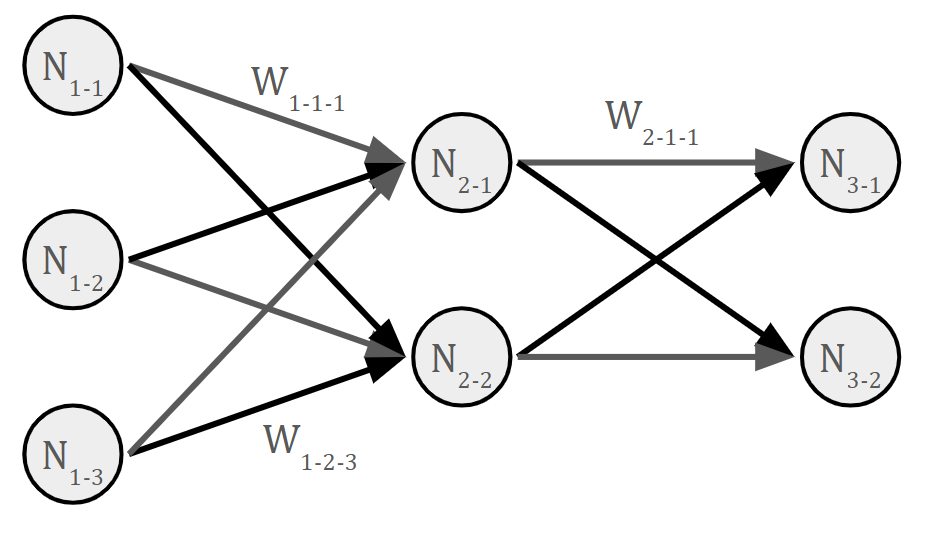

Because I started working on ANNs in 9th grade, my early networks were limited by my knowledge of calculus. After learning the basics of differentiation in 2023, I was able to expand my projects to use the full backpropagation algorithm. This first took the form of a 1×1 ANN, meaning that it had one input node, one output node, and no hidden layers. I then expanded this to hard-coded 2×2, 1×2×2, 2×2×1, and other small networks. The networks converged and had working backpropagation, activation functions, and biases, and some used Adam optimization; like Project #1, they were programmed from scratch without ML libraries.

Two examples of schematics for the hard-coded networks

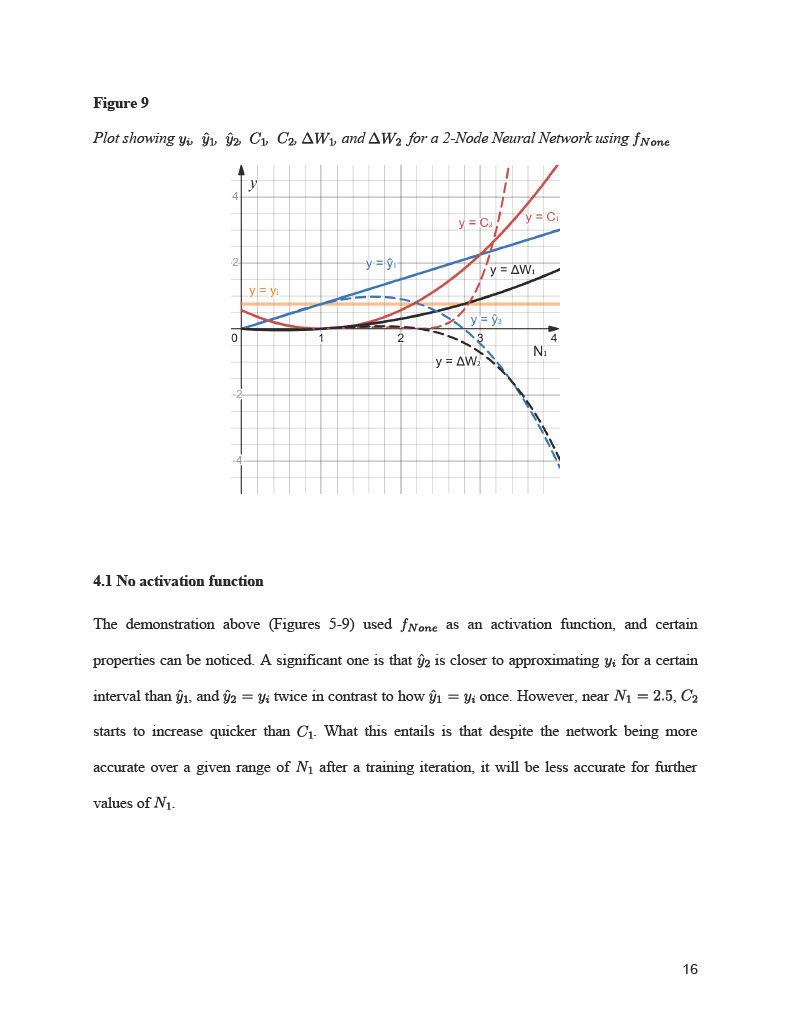

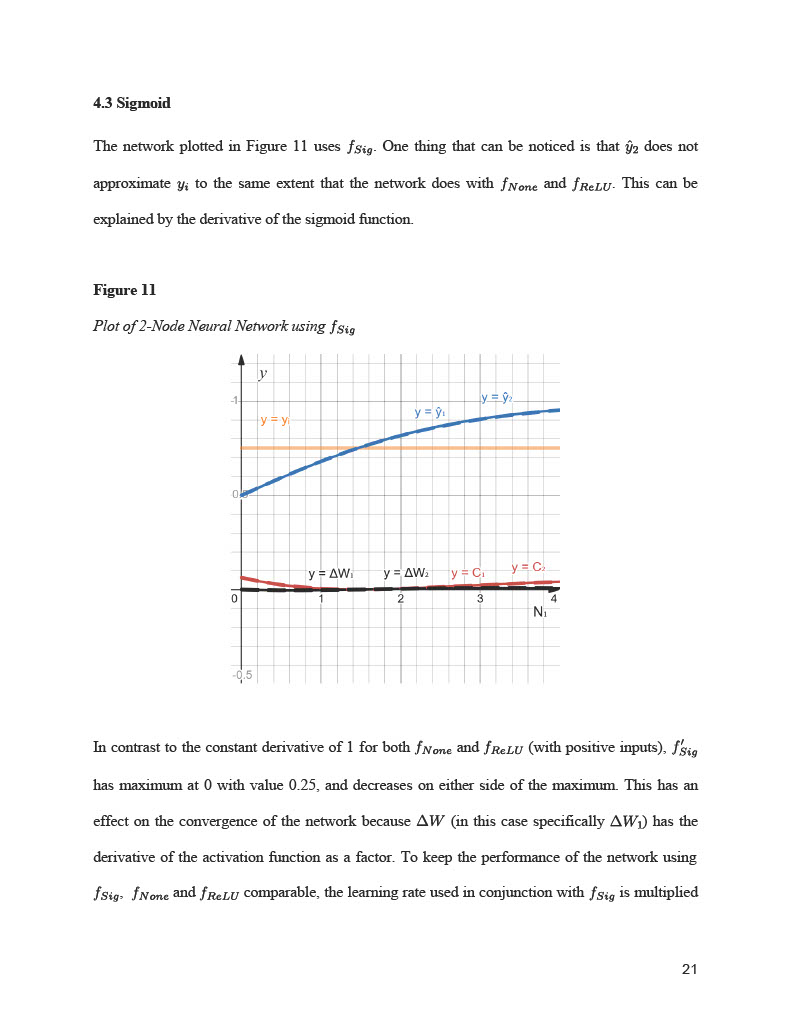
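To make the 1×1 case concrete, here is a minimal plain-Python sketch of one input node feeding one sigmoid output node, trained with mean squared error and gradient descent, where the gradients come from the chain rule. The NOT-gate data, learning rate, initial weights, and function names are illustrative assumptions rather than details of the original project.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_1x1(data, lr=1.0, epochs=5000, w=0.5, b=0.0):
    """Gradient descent for a 1x1 network: a = sigmoid(w * x + b).

    The loss is the mean of (a - y)^2 over the dataset.
    """
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            a = sigmoid(w * x + b)              # forward pass
            # Backpropagation (chain rule): dL/dz = dL/da * da/dz
            dz = 2.0 * (a - y) * a * (1.0 - a)
            grad_w += dz * x                    # dz/dw = x
            grad_b += dz                        # dz/db = 1
        w -= lr * grad_w / n                    # average over the dataset
        b -= lr * grad_b / n
    return w, b

def mse(data, w, b):
    return sum((sigmoid(w * x + b) - y) ** 2 for x, y in data) / len(data)

# Hypothetical toy task: learn a NOT gate (0 -> 1, 1 -> 0).
not_gate = [(0.0, 1.0), (1.0, 0.0)]
w, b = train_1x1(not_gate)
print(f"w = {w:.3f}, b = {b:.3f}, loss = {mse(not_gate, w, b):.5f}")
for x, _ in not_gate:
    print(f"input {x:.0f} -> output {sigmoid(w * x + b):.3f}")
```

In larger networks the same chain-rule step is applied to every weight, with each hidden node accumulating gradient contributions from all of the nodes it feeds.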

Project #3 – Research Paper on ANN Convergence (2024)

I wrote my IB Extended Essay in mathematics on how different activation functions affect the convergence of small neural networks. The paper compared networks using no activation function, ReLU, and sigmoid, and explained the mathematics behind phenomena such as dying ReLU, exploding gradients, and vanishing gradients.

A 4-page excerpt of the research paper

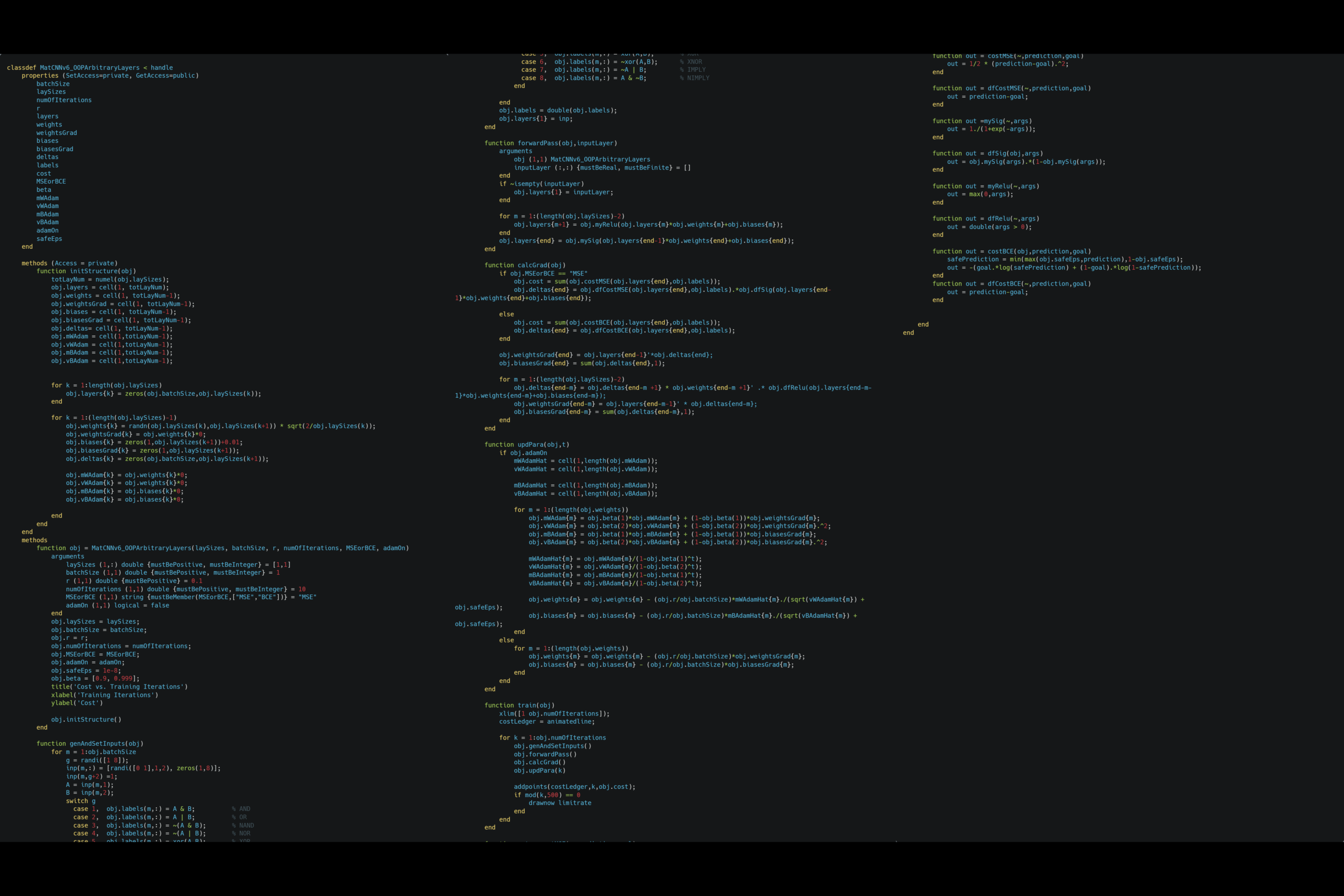

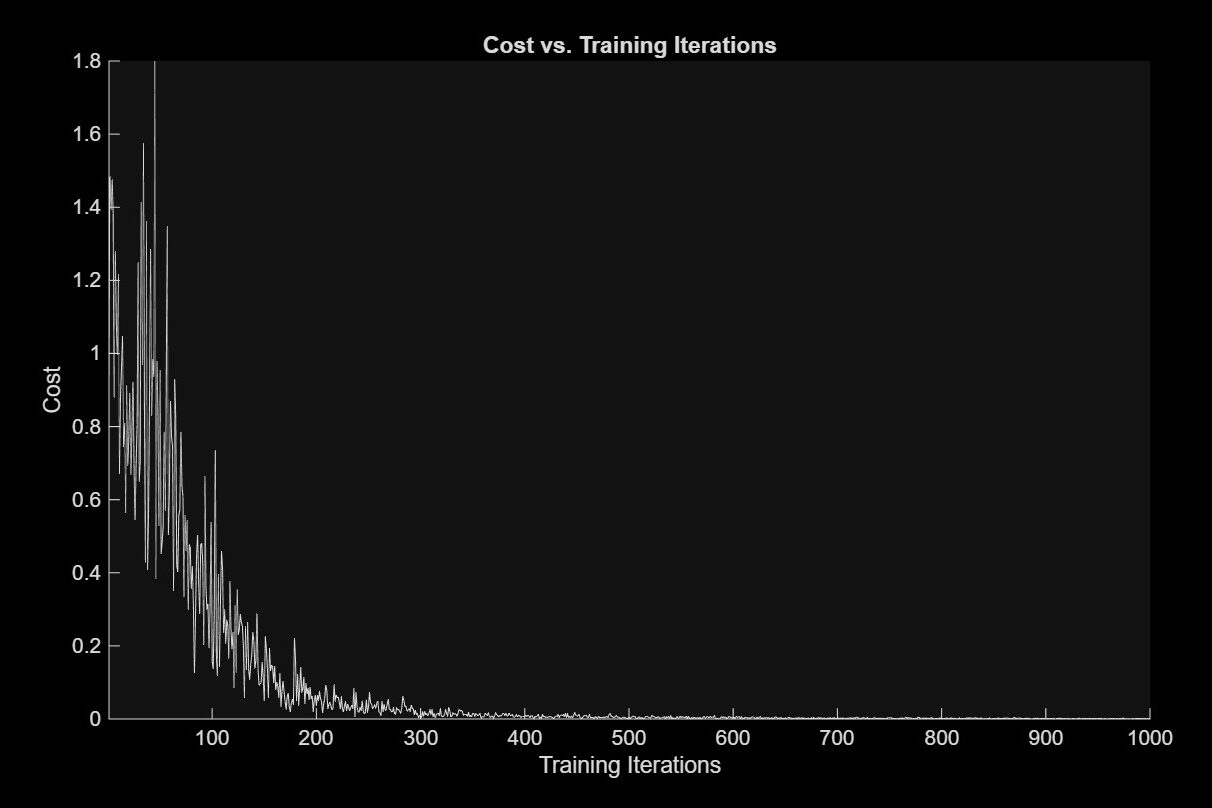
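The phenomena named above can be illustrated numerically. The sketch below uses a hypothetical helper, not code from the essay, that multiplies per-layer derivatives along a chain of one-neuron layers sharing a single weight. Sigmoid’s derivative never exceeds 0.25, so the product shrinks geometrically with depth (vanishing gradients). ReLU with weights above 1 makes it grow geometrically (exploding gradients). A ReLU whose pre-activation is negative passes back exactly zero gradient; when that happens for every input, the neuron stops learning (dying ReLU). The pre-activation values and weights are assumptions chosen to make each effect visible.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)          # maximum value 0.25, reached at z = 0

def relu_prime(z: float) -> float:
    return 1.0 if z > 0 else 0.0  # gradient is exactly zero for z <= 0

def chain_gradient(derivative, zs, weight=1.0):
    """Gradient reaching the first layer of a chain of one-neuron layers.

    By the chain rule, each layer contributes a factor weight * f'(z),
    where z is that layer's (assumed) pre-activation.
    """
    g = 1.0
    for z in zs:
        g *= weight * derivative(z)
    return g

for depth in (1, 5, 10, 20):
    # Best case for sigmoid (z = 0 everywhere): 0.25 ** depth
    vanishing = chain_gradient(sigmoid_prime, [0.0] * depth)
    # Active ReLUs with weight 2: 2 ** depth
    exploding = chain_gradient(relu_prime, [1.0] * depth, weight=2.0)
    print(f"depth {depth:2d}: sigmoid {vanishing:.3e}, ReLU (w=2) {exploding:.0f}")

# Dying ReLU: one negative pre-activation blocks the gradient for earlier layers.
print(chain_gradient(relu_prime, [1.0, -0.5, 1.0]))  # 0.0
```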

Project #4 – From-scratch N×N Networks (2024-2025)

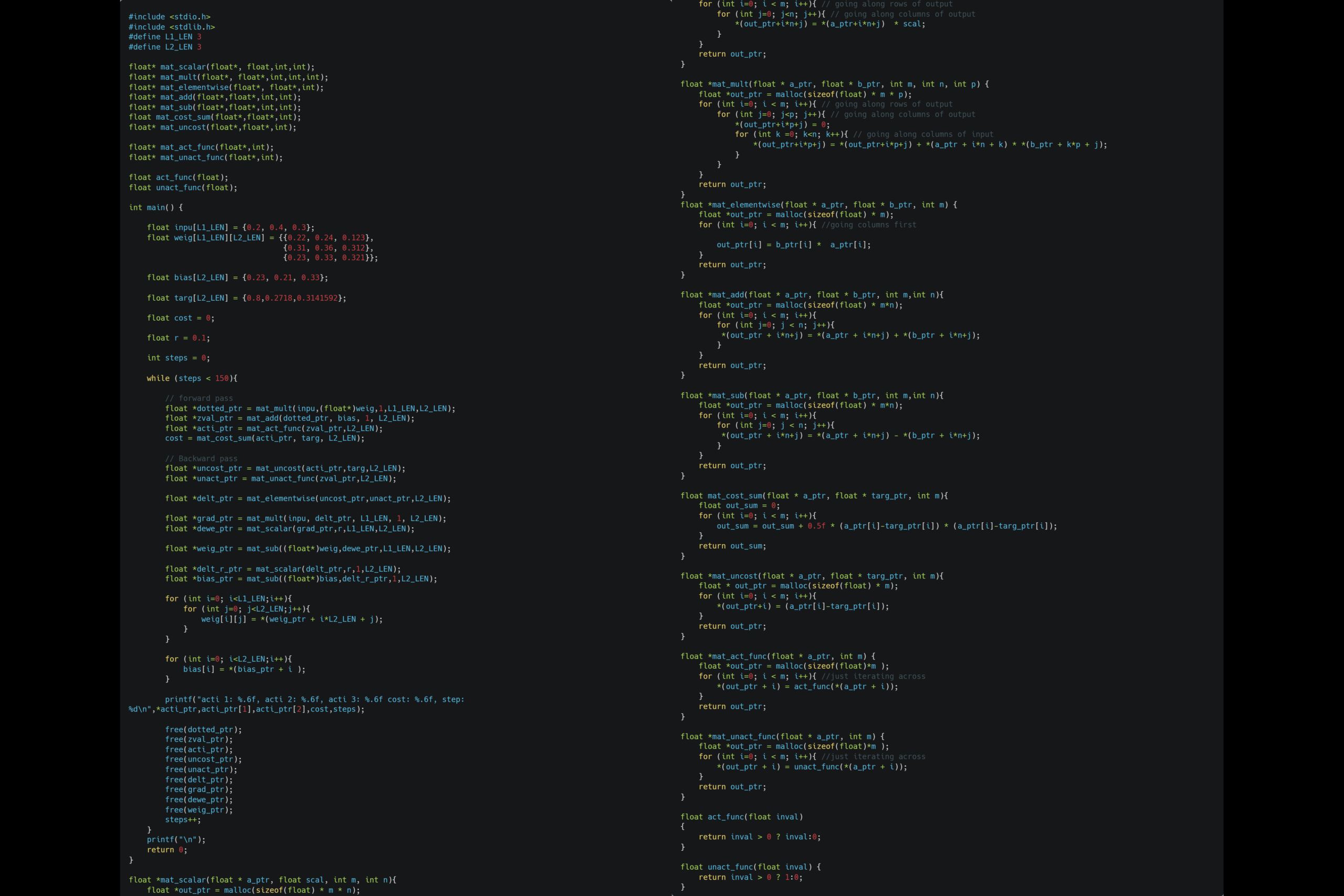

The fourth generation of ANNs I programmed from scratch supported an arbitrary number of layers and nodes, MSE or BCE cost, Adam optimization, and arbitrary activation functions. These networks were first written in Python, after which I created similar versions in C and MATLAB. The project has become my default way to learn a new programming language, given its combination of linear algebra, plotting, and runtime optimization.

Code (left) and cost curve (middle) of a MATLAB network approximating logic gates, and ANN code written in C (right).
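One ingredient listed above is Adam optimization. The sketch below shows the standard Adam update (exponential moving averages of the gradient and squared gradient, with bias correction) using the commonly cited default hyperparameters. To keep it short and self-contained, it minimizes a toy quadratic instead of a network cost; the function names, learning rate, step count, and objective are illustrative assumptions, not details of the original networks.

```python
import math

def adam_minimize(grad_fn, params, lr=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=2000):
    """Minimize a function given its gradient, using the Adam update rule."""
    params = list(params)
    m = [0.0] * len(params)   # first-moment estimate (mean of gradients)
    v = [0.0] * len(params)   # second-moment estimate (mean of squared gradients)
    for t in range(1, steps + 1):
        grads = grad_fn(params)
        for i, g in enumerate(grads):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g * g
            m_hat = m[i] / (1 - beta1 ** t)   # bias correction for zero init
            v_hat = v[i] / (1 - beta2 ** t)
            params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return params

def quadratic_grad(p):
    """Gradient of the toy cost f(x, y) = (x - 3)^2 + (y + 1)^2."""
    x, y = p
    return [2 * (x - 3), 2 * (y + 1)]

x, y = adam_minimize(quadratic_grad, [0.0, 0.0])
print(f"x = {x:.4f}, y = {y:.4f}")  # approaches the minimum at (3, -1)
```

In a network, `grad_fn` would be replaced by backpropagation, and each weight and bias would keep its own `m` and `v` entries.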

Project #5 – Applying PyTorch and TensorFlow (2023-2025)

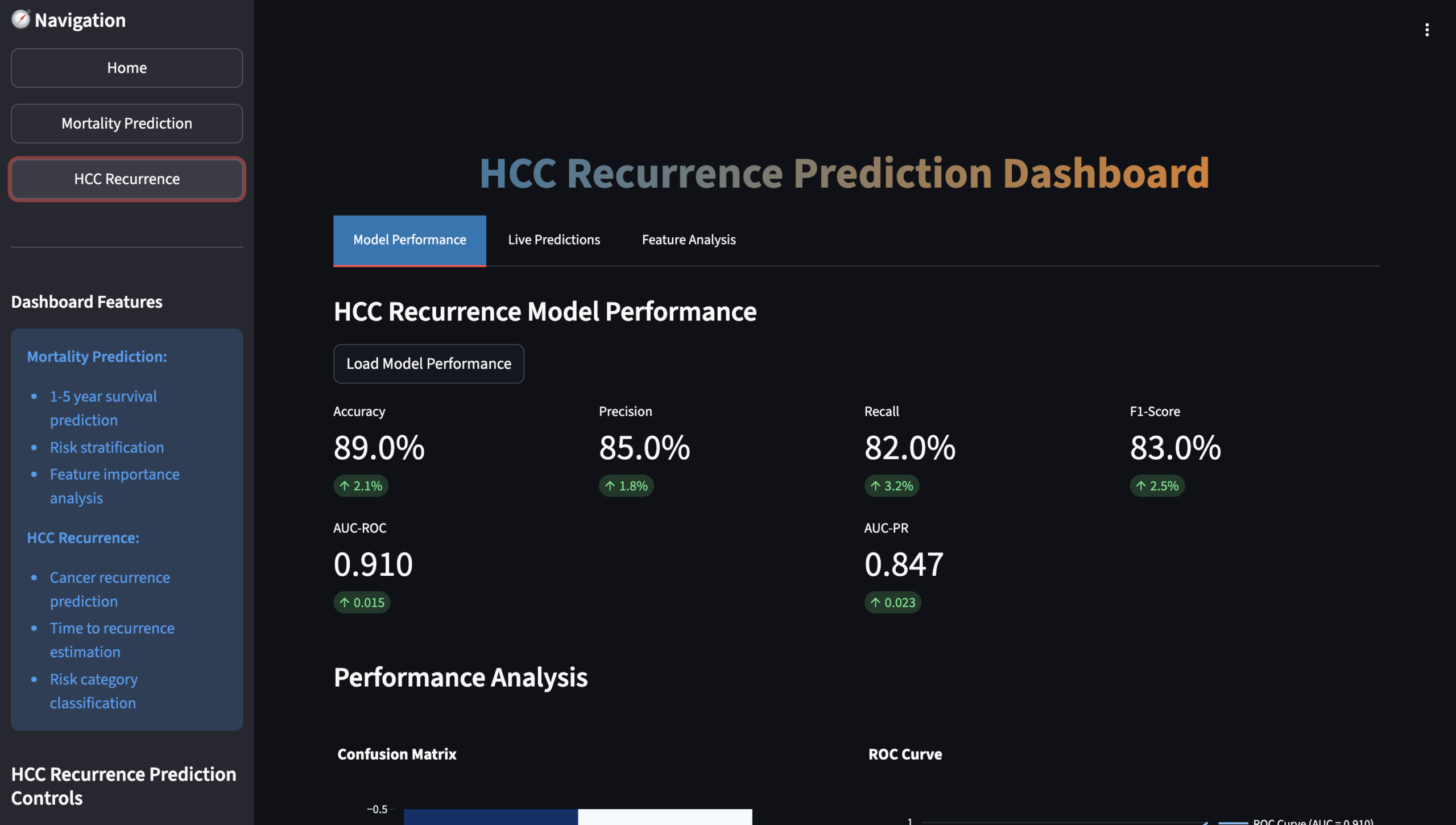

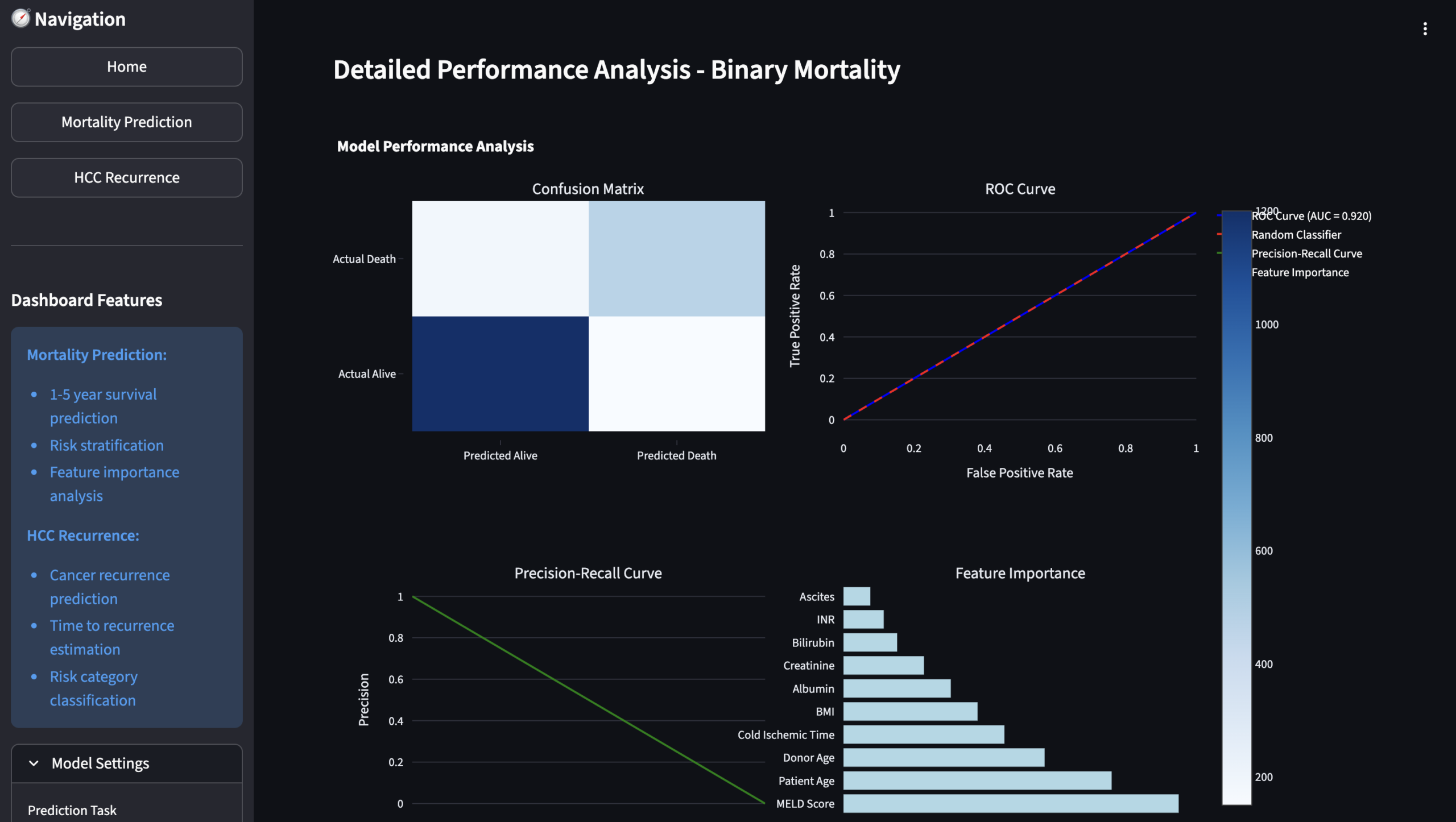

Given my interest in machine learning, I have also used pre-built machine learning libraries for various tasks. For example, the Robot Arm in “Other Projects” on this website used OpenCV to detect fiducials, estimate their locations, and follow them. Further projects include training PyTorch neural networks on MNIST, using neural networks and linear regression to predict income from census data, and using gradient-boosted decision trees and TabNet models to predict liver transplant success and HCC recurrence. The latter was a team project for the HackGT 12 hackathon.

Dashboard built for predicting HCC recurrence

Current & Future Projects

I hope to continue improving my understanding of neural networks and other machine learning architectures. Current projects include implementing reinforcement learning (RL) and recurrent neural network (RNN) models from scratch for agent-like behavior in robotics. Gaining a better understanding of transformers and their applications is another area of interest.